About me

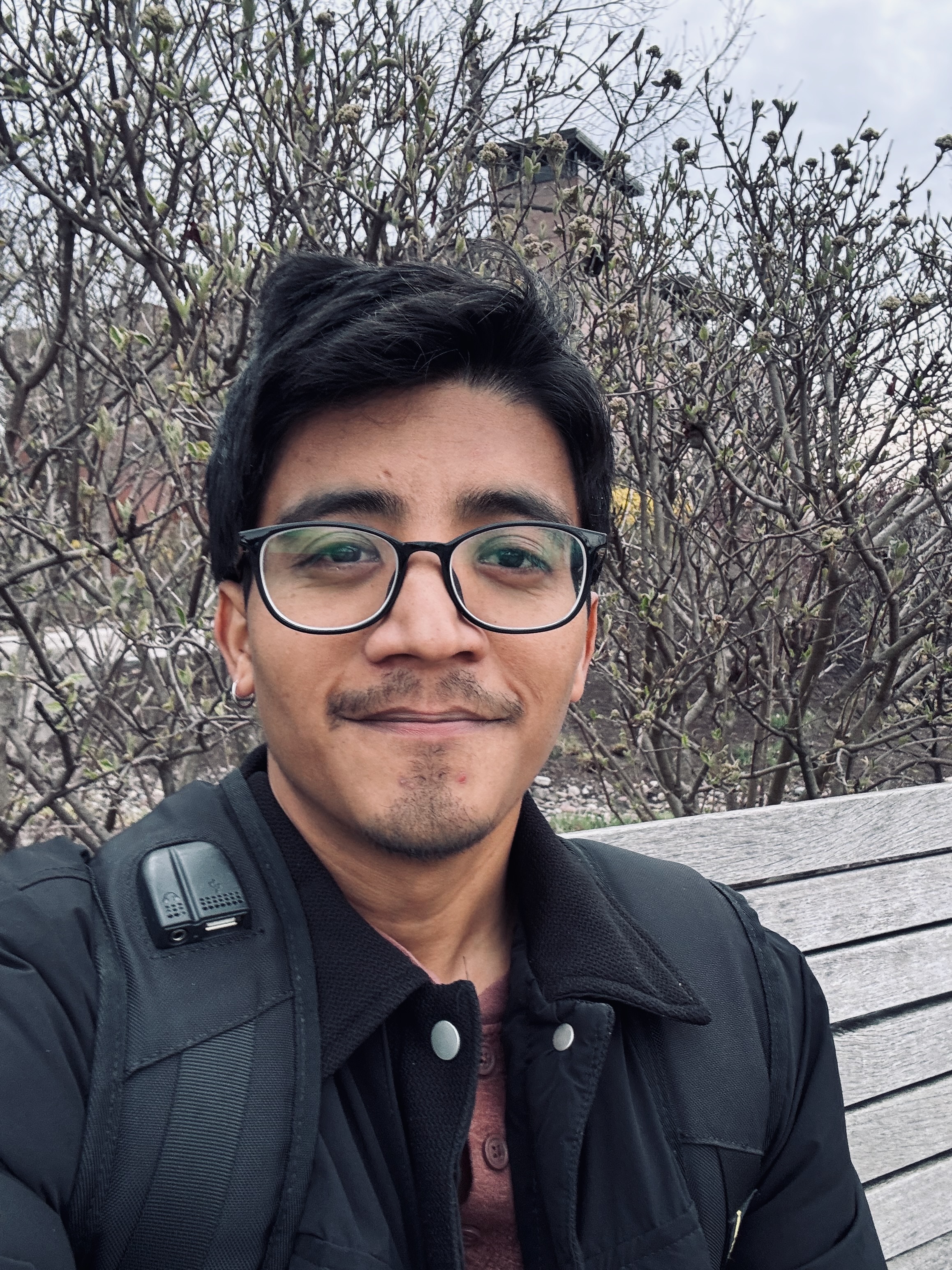

My name is Dipkamal Bhusal, and I am currently a fifth-year Computer Science PhD candidate at Golisano College of Computing and Information Sciences, Rochester Institute of Technology (RIT). My research focuses on reliable deep learning, with a particular emphasis on explainable machine learning.

Prior to my PhD, I received my Master's degree in Information Engineering from Pulchowk Campus, Tribhuvan University (Nepal) in 2021, with a focus on machine learning for medical diagnosis. Before that, I graduated in Electronics and Communication Engineering from the same campus in 2016.

From 2016 to 2021, I was a co-founder of Paaila Technology, a Kathmandu-based robotics and AI startup that developed service robots and chatbots. During my tenure, I served as a developer, project manager, and director. You can read more about Paaila Technology here. Before starting my PhD, I also taught computer science as a lecturer at IIMS College, Kathmandu, from 2020 to 2021.

In my free time, I am passionate about music: I play guitar and write songs whenever I get the chance. I also love exploring films from around the world and am always keen to discover hidden gems beyond mainstream hits.

Select Publications

Ayushi Mehrotra, Dipkamal Bhusal (Equal Contribution), Nidhi Rastogi

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2026

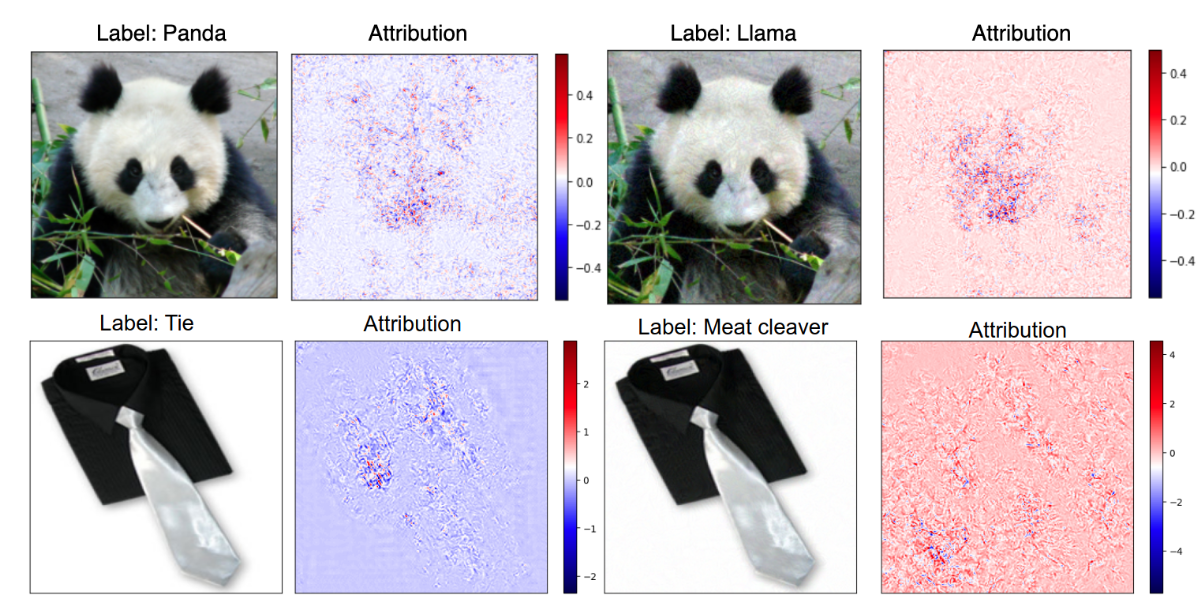

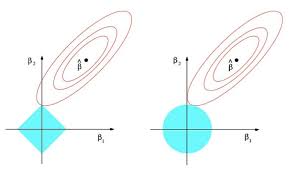

I mentored Ayushi in developing a new attribution framework that uncovers feature interactions in image classifiers. Our method, "H-Sets", discovers and attributes higher-order interactions by leveraging pixel-level Hessian computations grounded in spatial priors from the Segment Anything Model (SAM). Our approach ensures that post-hoc explanations satisfy core attribution axioms, resulting in saliency maps that are sparser and more faithful than those of existing methods.

Paper (Coming Soon) | Code (Coming Soon)

Dipkamal Bhusal, Michael Clifford, Sara Rampazzi, Nidhi Rastogi

39th Annual Conference on Neural Information Processing Systems (NeurIPS) 2025

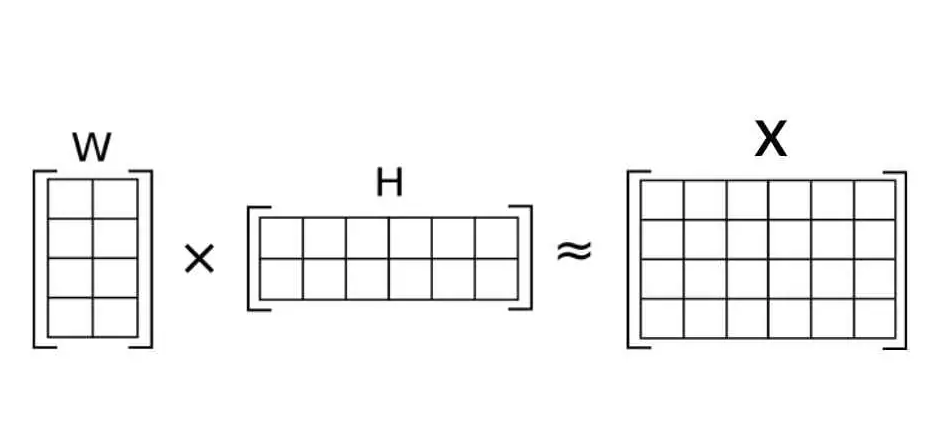

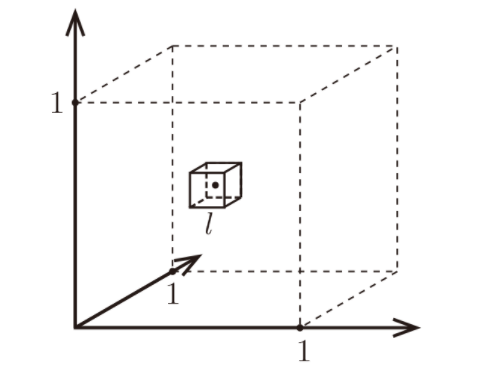

In this work, we propose FACE (Faithful Automatic Concept Extraction), a novel framework that augments Non-negative Matrix Factorization (NMF) with a Kullback-Leibler (KL) divergence regularization term to ensure alignment between the model’s original and concept-based predictions. Unlike prior methods that operate solely on encoder activations, FACE incorporates classifier supervision during concept learning, enforcing predictive consistency and enabling faithful explanations.

Ryan L. Yang, Dipkamal Bhusal (Equal Contribution), Nidhi Rastogi

First Workshop on CogInterp: Interpreting Cognition in Deep Learning Models (NeurIPS 2025)

In this work, I mentored Ryan Yang, an undergraduate at Brown University who joined RIT for summer research. The project tackles a core challenge in deep learning interpretability: ensuring that models are "right for the right reasons." We introduce a scalable framework that uses a vision-language model to extract semantic attention and then trains the model to ground its predictions in the correct evidence.

Ayushi Mehrotra, Derek Peng, Dipkamal Bhusal (Mentorship), Nidhi Rastogi

Workshop on Reliable ML from Unreliable Data (NeurIPS 2025)

In this work, I mentored Ayushi and Derek in designing a patch-agnostic defense against adversarial patch attacks. The defense leverages concept-based explanations to identify and suppress the most influential concept activation vectors, thereby neutralizing patch effects without explicit patch detection. Our method achieves higher robust and clean accuracy than the state-of-the-art defense PatchCleanser.

Sanish Suwal, Dipkamal Bhusal (Equal Contribution), Michael Clifford, Nidhi Rastogi

First Workshop on CogInterp: Interpreting Cognition in Deep Learning Models (NeurIPS 2025)

In this work, I mentored Sanish as we investigated how magnitude-based pruning followed by fine-tuning affects both low-level saliency maps and high-level concept representations.
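
For readers unfamiliar with the setup, magnitude-based pruning followed by fine-tuning can be sketched in a few lines. This is a minimal illustration, not the code used in the paper: it assumes global unstructured pruning over a flat list of weights and a plain SGD update, and the names `magnitude_prune` and `masked_sgd_step` are hypothetical. The weights with the smallest absolute values are zeroed, and the resulting mask stays fixed during fine-tuning so that pruned weights remain at zero.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes.

    Hypothetical helper: global, unstructured pruning over a flat list.
    Returns the pruned weights and a binary mask (1 = kept, 0 = pruned).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Rank indices by absolute weight, smallest first (ties keep original order).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    mask = [0 if i in pruned_idx else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


def masked_sgd_step(weights, grads, mask, lr):
    """One fine-tuning SGD step that only updates surviving (unmasked) weights."""
    return [w - lr * g if m else w for w, g, m in zip(weights, grads, mask)]


w = [0.5, -0.1, 0.03, -0.8, 0.2]
pruned, mask = magnitude_prune(w, sparsity=0.4)
print(mask)    # [1, 0, 0, 1, 1]
print(pruned)  # [0.5, 0.0, 0.0, -0.8, 0.2]
print(masked_sgd_step(pruned, [1.0] * 5, mask, lr=0.1))
```

Keeping the mask fixed is what distinguishes "prune then fine-tune" from simply retraining: the surviving weights adapt, while the pruned connections stay removed.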

Dipkamal Bhusal, Md Tanvirul Alam, Le Nguyen, Ashim Mahara, Zachary Lightcap, Rodney, Romy, Grace, Benjamin, Nidhi Rastogi

40th Annual Computer Security Applications Conference (ACSAC) 2024

Large Language Models (LLMs) have demonstrated potential in cybersecurity applications but suffer from hallucinations and a lack of truthfulness. Existing benchmarks provide general evaluations but do not sufficiently address the practical and applied aspects of cybersecurity-specific tasks. To address this gap, we introduce SECURE (Security Extraction, Understanding & Reasoning Evaluation), a benchmark designed to assess LLMs' performance in realistic cybersecurity scenarios.

Md Tanvirul Alam, Dipkamal Bhusal (Equal Contribution), Le Nguyen, Nidhi Rastogi

38th Annual Conference on Neural Information Processing Systems (NeurIPS) 2024

Cyber threat intelligence (CTI) is crucial in today's cybersecurity landscape, providing essential insights for understanding and mitigating ever-evolving cyber threats. We extend the knowledge-intensive LLM evaluation framework proposed in SECURE to CTI-specific tasks and introduce CTIBench, a benchmark designed to assess LLMs' performance in CTI applications.

Dipkamal Bhusal, Md Tanvirul Alam, Monish K., Michael Clifford, Sara Rampazzi, Nidhi Rastogi

9th IEEE European Symposium on Security and Privacy (EuroS&P) 2024

We develop a practical method that uses the sensitivity of model predictions and feature attributions to detect adversarial samples. Our method, PASA, computes two test statistics from the model prediction and the feature attribution, and reliably detects adversarial samples using thresholds learned from benign samples.
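
The idea of learning detection thresholds from benign data alone can be illustrated with a small sketch. It is hypothetical and not PASA's implementation: the two statistics are taken as precomputed per-sample scores (how PASA derives them from prediction and attribution sensitivity is not reproduced here), each threshold is an assumed nearest-rank quantile of the benign scores at a target false-positive rate `fpr`, and flagging a sample when either statistic exceeds its threshold is an assumed combination rule. All function names are illustrative.

```python
import math


def learn_threshold(benign_scores, fpr=0.05):
    """Nearest-rank (1 - fpr) quantile of scores measured on benign samples.

    Up to floating-point rounding, at most a fraction `fpr` of the benign
    scores lie strictly above the returned threshold.
    """
    if not benign_scores:
        raise ValueError("need at least one benign score")
    if not 0.0 <= fpr < 1.0:
        raise ValueError("fpr must be in [0, 1)")
    ordered = sorted(benign_scores)
    rank = max(1, math.ceil((1.0 - fpr) * len(ordered)))  # 1-based rank
    return ordered[rank - 1]


def fit_detector(benign_stat_a, benign_stat_b, fpr=0.05):
    """Learn one threshold per (hypothetical) test statistic from benign data only."""
    return learn_threshold(benign_stat_a, fpr), learn_threshold(benign_stat_b, fpr)


def is_adversarial(stat_a, stat_b, thresholds):
    """Assumed rule: flag a sample if either statistic exceeds its benign threshold."""
    t_a, t_b = thresholds
    return stat_a > t_a or stat_b > t_b


# Illustrative benign scores; real statistics would come from the model.
benign_a = [i / 100 for i in range(100)]
benign_b = [i / 50 for i in range(100)]
thresholds = fit_detector(benign_a, benign_b, fpr=0.05)
print(is_adversarial(0.10, 0.20, thresholds))  # False
print(is_adversarial(0.10, 5.00, thresholds))  # True
```

Because no adversarial examples are needed to fit the thresholds, a detector of this shape does not assume a particular attack. With two thresholds each set at rate `fpr`, the combined benign false-positive rate is at most about twice `fpr` (union bound), so the per-statistic rate would be chosen with that in mind.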

Dipkamal Bhusal, Rosalyn Shin, Ajay Ashok Shewale, Monish Kumar Manikya Veerabhadran, Michael Clifford, Sara Rampazzi, Nidhi Rastogi

18th International Conference on Availability, Reliability and Security (ARES) 2023

This paper provides a comprehensive analysis of explanation methods and evaluates their efficacy in three distinct security applications: anomaly detection using system logs, malware prediction, and detection of adversarial images. Our quantitative and qualitative analysis reveals serious limitations and concerns in state-of-the-art explanation methods in all three applications.

Blogs

A summary of interesting papers I have come across. I will try to update this page frequently.

A note on understanding non-negative matrix factorization.